Apple’s $2499 16" beast vs. a completely free environment - Which is better for TensorFlow?

Apple has changed the laptop industry for the second year in a row. The new 14" and 16" MacBook Pros are what professional users have been waiting for since the base M1 release back in 2020. Do they deliver? Definitely, but can an entirely free Google Colab outperform them? That’s what we’ll answer today.

Want to see how the base M1 from 2020 compares with Google Colab for data science? I’ve got you covered:

MacBook M1 vs. Google Colab for Data Science - Unexpected Results

Today we’ll run two data science benchmarks using TensorFlow and compare the MacBook Pro M1 Pro with Google Colab. We’ll ignore the obvious benefits of having a lightning-fast laptop and focus only on the model training speed.

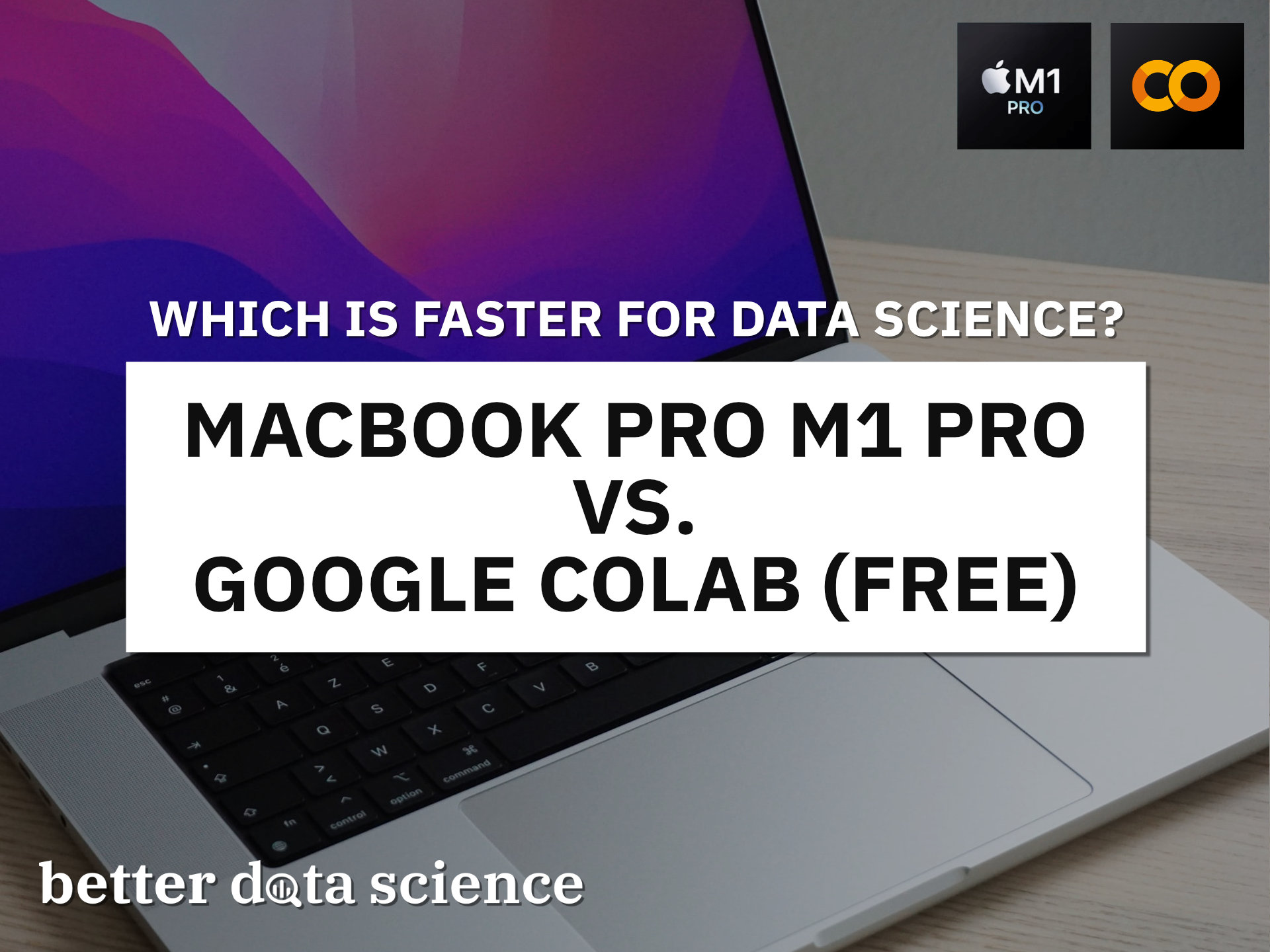

Here’s a table summarizing hardware specifications between the two:

Image 1 - Hardware specification comparison (image by author)

M1 Pro has more RAM and a more recent CPU, but it’s the GPU we care about. The one I tested has 16 GPU cores; configurations with 24 or 32 cores are available with the M1 Max chip. Colab assigns its environments at random, so you’re likely to get a different one than I did, and your benchmark results may vary.

MacBook M1 Pro vs. Google Colab - Data Science Benchmark Setup

You’ll need TensorFlow installed if you’re following along. Here’s an entire article dedicated to installing TensorFlow on Apple M1:

How To Install TensorFlow 2.7 on MacBook Pro M1 Pro With Ease
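Once TensorFlow is installed, a quick check like the one below can confirm that it imports and whether it sees a GPU. This is a minimal sketch and not part of the original benchmark setup: `tensorflow_summary` is a hypothetical helper name, and it relies only on the standard `tf.config.list_physical_devices` call.

```python
import importlib.util


def tensorflow_summary():
    """Report whether TensorFlow is importable and which GPUs it can see."""
    # Hypothetical helper for illustration; not part of the benchmark code.
    if importlib.util.find_spec("tensorflow") is None:
        return {"installed": False, "version": None, "gpus": []}

    import tensorflow as tf

    gpus = tf.config.list_physical_devices("GPU")
    return {
        "installed": True,
        "version": tf.__version__,
        "gpus": [gpu.name for gpu in gpus],
    }


print(tensorflow_summary())
```

An empty `gpus` list suggests that training would run on the CPU, which would make the timings below hard to compare.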

Also, you’ll need an image dataset. I’ve used the Dogs vs. Cats dataset from Kaggle, which is licensed under the Creative Commons License. Long story short, you can use it for free.

Refer to the following article for detailed instructions on how to organize and preprocess it:

TensorFlow for Image Classification - Top 3 Prerequisites for Deep Learning Projects
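The `flow_from_directory` calls used in the code below expect one subdirectory per class inside each split folder. A minimal sketch for checking that layout before training follows. The paths `data/train` and `data/validation` match the ones used in this article, while the helper name `count_images` and the set of accepted file extensions are assumptions made for illustration.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your dataset.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images(split_dir):
    """Return {class_name: image_count} for every class subdirectory."""
    counts = {}
    class_dirs = sorted(p for p in Path(split_dir).iterdir() if p.is_dir())
    for class_dir in class_dirs:
        counts[class_dir.name] = sum(
            1
            for f in class_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


for split in ("data/train", "data/validation"):
    if Path(split).is_dir():
        print(split, count_images(split))
    else:
        print(f"{split} not found")
```

For this dataset you would expect two class folders per split, one for cats and one for dogs, whatever you chose to name them.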

We’ll do two tests today:

- TensorFlow with a custom model architecture - Uses two convolutional blocks described in my CNN article.

- TensorFlow with transfer learning - Uses VGG-16 pretrained network to classify images.

Let’s go over the code used in the tests.

Custom TensorFlow Model - The Code

I’ve split this test into two parts - a model with and without data augmentation. Use only a single pair of train_datagen and valid_datagen at a time:

import os
import warnings
from datetime import datetime

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
warnings.filterwarnings('ignore')

import numpy as np
import tensorflow as tf

tf.random.set_seed(42)

# COLAB ONLY
from google.colab import drive
drive.mount('/content/drive')

####################
# 1. Data loading
####################
# USED ON A TEST WITHOUT DATA AUGMENTATION
train_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1/255.0
)
valid_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1/255.0
)

# USED ON A TEST WITH DATA AUGMENTATION
train_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1/255.0,
    rotation_range=20,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest'
)
valid_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1/255.0
)

train_data = train_datagen.flow_from_directory(
    directory='data/train/',
    target_size=(224, 224),
    class_mode='categorical',
    batch_size=64,
    seed=42
)
valid_data = valid_datagen.flow_from_directory(
    directory='data/validation/',
    target_size=(224, 224),
    class_mode='categorical',
    batch_size=64,
    seed=42
)

####################
# 2. Model
####################
model = tf.keras.Sequential([
    tf.keras.layers.Conv2D(filters=32, kernel_size=(3, 3), input_shape=(224, 224, 3), activation='relu'),
    tf.keras.layers.MaxPool2D(pool_size=(2, 2), padding='same'),
    tf.keras.layers.Conv2D(filters=32, kernel_size=(3, 3), activation='relu'),
    tf.keras.layers.MaxPool2D(pool_size=(2, 2), padding='same'),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(2, activation='softmax')
])

model.compile(
    loss=tf.keras.losses.categorical_crossentropy,
    optimizer=tf.keras.optimizers.Adam(),
    metrics=[tf.keras.metrics.BinaryAccuracy(name='accuracy')]
)

####################
# 3. Training
####################
time_start = datetime.now()
model.fit(
    train_data,
    validation_data=valid_data,
    epochs=5
)
time_end = datetime.now()
print(f'Duration: {time_end - time_start}')
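To get a feel for the size of this network, the layer output shapes and parameter counts can be worked out by hand. The sketch below does that arithmetic in plain Python. It assumes the Keras defaults that the code above doesn’t override (`padding='valid'` and stride 1 for `Conv2D`, stride equal to `pool_size` for `MaxPool2D`), and the helper names are made up for illustration.

```python
import math


def conv_out(size, kernel=3):
    # 'valid' padding with stride 1 trims (kernel - 1) pixels per dimension.
    return size - kernel + 1


def pool_out(size, pool=2):
    # 'same' padding with stride == pool size rounds the result up.
    return math.ceil(size / pool)


def conv_params(in_channels, out_channels, kernel=3):
    # One kernel x kernel filter per input/output channel pair, plus biases.
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(in_units, out_units):
    # Full weight matrix plus one bias per output unit.
    return in_units * out_units + out_units


size = 224
size = pool_out(conv_out(size))  # first block: 224 -> 222 -> 111
size = pool_out(conv_out(size))  # second block: 111 -> 109 -> 55
flat_units = size * size * 32    # Flatten over 32 feature maps

total_params = (
    conv_params(3, 32)
    + conv_params(32, 32)
    + dense_params(flat_units, 128)
    + dense_params(128, 2)
)

print(f"Flatten output: {flat_units:,} units")
print(f"Total parameters: {total_params:,}")
```

Under these assumptions almost all parameters sit in the first `Dense` layer, because `Flatten` hands it 96,800 inputs. You can compare the totals against `model.summary()`.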

Let’s go over the transfer learning code next.

Transfer Learning TensorFlow Model - The Code

Most of the imports and data loading code is the same. Once again, use only a single pair of train_datagen and valid_datagen at a time:

import os
import warnings
from datetime import datetime

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
warnings.filterwarnings('ignore')

import numpy as np
import tensorflow as tf

tf.random.set_seed(42)

# COLAB ONLY
from google.colab import drive
drive.mount('/content/drive')

####################
# 1. Data loading
####################
# USED ON A TEST WITHOUT DATA AUGMENTATION
train_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1/255.0
)
valid_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1/255.0
)

# USED ON A TEST WITH DATA AUGMENTATION
train_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1/255.0,
    rotation_range=20,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest'
)
valid_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1/255.0
)

train_data = train_datagen.flow_from_directory(
    directory='data/train/',
    target_size=(224, 224),
    class_mode='categorical',
    batch_size=64,
    seed=42
)
valid_data = valid_datagen.flow_from_directory(
    directory='data/validation/',
    target_size=(224, 224),
    class_mode='categorical',
    batch_size=64,
    seed=42
)

####################
# 2. Base model
####################
vgg_base_model = tf.keras.applications.vgg16.VGG16(
    include_top=False,
    input_shape=(224, 224, 3),
    weights='imagenet'
)

for layer in vgg_base_model.layers:
    layer.trainable = False

####################
# 3. Custom layers
####################
x = tf.keras.layers.Flatten()(vgg_base_model.layers[-1].output)
x = tf.keras.layers.Dense(128, activation='relu')(x)
out = tf.keras.layers.Dense(2, activation='softmax')(x)

vgg_model = tf.keras.models.Model(
    inputs=vgg_base_model.inputs,
    outputs=out
)

vgg_model.compile(
    loss=tf.keras.losses.categorical_crossentropy,
    optimizer=tf.keras.optimizers.Adam(),
    metrics=[tf.keras.metrics.BinaryAccuracy(name='accuracy')]
)

####################
# 4. Training
####################
time_start = datetime.now()
vgg_model.fit(
    train_data,
    validation_data=valid_data,
    epochs=5
)
time_end = datetime.now()
print(f'Duration: {time_end - time_start}')
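Freezing the base model means only the two new `Dense` layers are updated during training. As a rough illustration, the split between frozen and trainable parameters can be derived from the standard VGG-16 convolutional configuration (thirteen 3x3 convolutions and five 2x2 poolings). The channel list below is written out by hand as an assumption rather than read from Keras, so treat the numbers as a sanity check against `vgg_model.summary()`.

```python
# (in_channels, out_channels) for the 13 convolutional layers of VGG-16,
# written out by hand for illustration.
VGG16_CONVS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]

# Each 3x3 convolution has 9 weights per channel pair plus one bias per filter.
frozen_params = sum(3 * 3 * cin * cout + cout for cin, cout in VGG16_CONVS)

# Five 2x2 poolings shrink 224 pixels down to 224 / 2**5 = 7 per side.
feature_size = 224 // 2 ** 5
flat_units = feature_size * feature_size * 512

# Dense(128) on the flattened features, followed by Dense(2).
trainable_params = (flat_units * 128 + 128) + (128 * 2 + 2)

print(f"Frozen parameters:    {frozen_params:,}")
print(f"Trainable parameters: {trainable_params:,}")
```

With these assumptions, roughly 14.7 million parameters stay frozen and about 3.2 million are trained.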

Finally, let’s see the results of the benchmarks.

MacBook M1 Pro vs. Google Colab - Data Science Benchmark Results

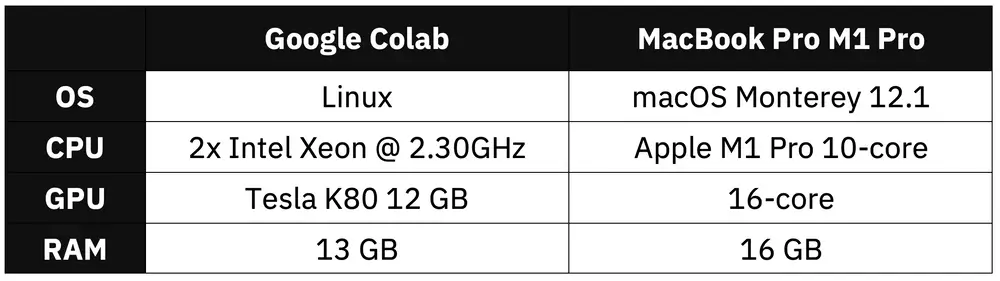

We’ll now compare the average training time per epoch for both M1 Pro and Google Colab on the custom model architecture. Keep in mind that two models were trained, one with and one without data augmentation:

Image 2 - Benchmark results on a custom model (Colab: 87.8s; Colab (augmentation): 286.8s; M1 Pro: 71s; M1 Pro (augmentation): 127.8s) (image by author)
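The benchmark scripts print the total training duration, while the charts report seconds per epoch. One plausible way to get from one to the other is sketched below, assuming the average is simply the total duration divided by the number of epochs (the article does not spell this out). `average_epoch_seconds` is a hypothetical helper, and the timestamps are made-up values chosen for illustration.

```python
from datetime import datetime, timedelta


def average_epoch_seconds(time_start, time_end, epochs):
    """Average wall-clock seconds per epoch between two timestamps."""
    if epochs <= 0:
        raise ValueError("epochs must be a positive integer")
    return (time_end - time_start).total_seconds() / epochs


# Made-up example: a 5-epoch run that took 5 minutes 55 seconds in total.
time_start = datetime(2021, 12, 1, 12, 0, 0)
time_end = time_start + timedelta(minutes=5, seconds=55)

print(average_epoch_seconds(time_start, time_end, epochs=5))
```

Measured this way, the figure also includes the validation pass at the end of each epoch, since `validation_data` is passed to `fit`.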

M1 Pro was definitely faster on this TensorFlow test. Without augmentation, Google Colab needed around 24% more time per epoch than M1 Pro (87.8s vs. 71s). The difference skyrockets to 124% for the model that uses an augmented image dataset (286.8s vs. 127.8s).

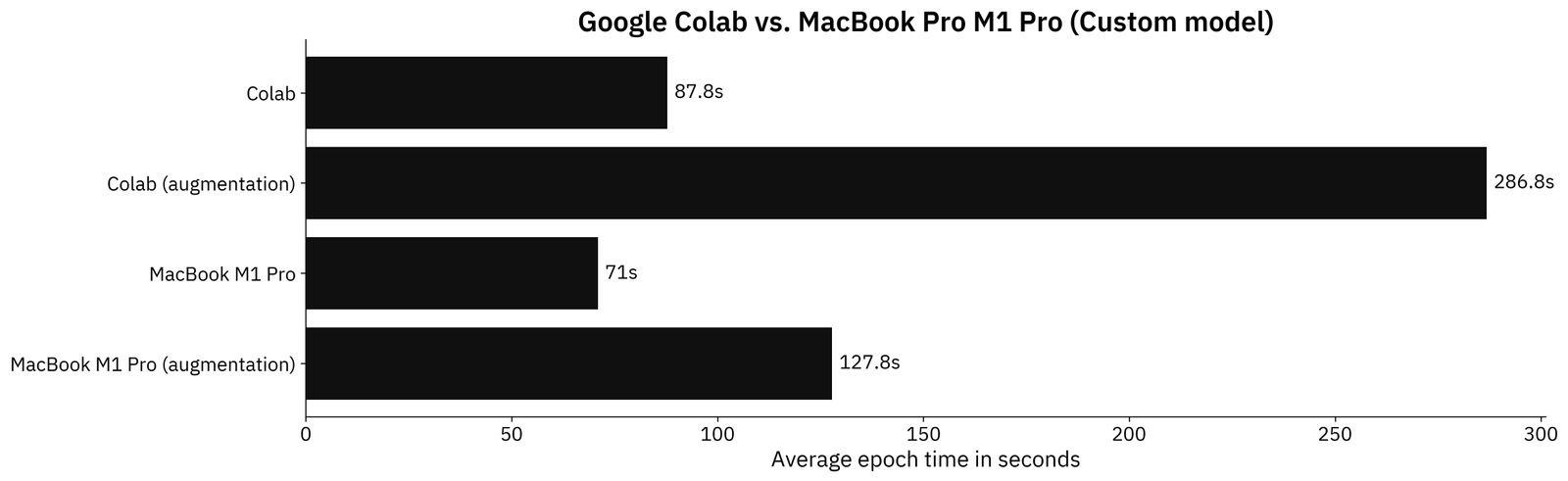

But who writes CNN models from scratch these days? Transfer learning is always recommended if you have limited data and your images aren’t highly specialized. Here are the results for the transfer learning models:

Image 3 - Benchmark results on a transfer learning model (Colab: 159s; Colab (augmentation): 340.6s; M1 Pro: 161.4s; M1 Pro (augmentation): 162.4s) (image by author)

The results are, well, surprising. Without augmentation, Google Colab and the M1 Pro MacBook are nearly identical: the 2.4-second difference in favor of Colab is negligible. With the augmented image dataset, Colab needed slightly more than twice as long per epoch (340.6s vs. 162.4s), a training time difference of just over 100%.
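The percentages quoted for both benchmarks can be reproduced from the per-epoch times in Images 2 and 3. The sketch below expresses each gap as the extra time Colab needed relative to M1 Pro; the dictionary layout and the `extra_time_percent` helper are illustrative choices, while the timings are copied from the image captions.

```python
# Average seconds per epoch, copied from the captions of Images 2 and 3.
results = {
    "custom": {"colab": 87.8, "m1_pro": 71.0},
    "custom (augmentation)": {"colab": 286.8, "m1_pro": 127.8},
    "transfer learning": {"colab": 159.0, "m1_pro": 161.4},
    "transfer learning (augmentation)": {"colab": 340.6, "m1_pro": 162.4},
}


def extra_time_percent(candidate, baseline):
    """Percent of extra time `candidate` needed compared to `baseline`."""
    return (candidate / baseline - 1) * 100


for name, times in results.items():
    diff = extra_time_percent(times["colab"], times["m1_pro"])
    print(f"{name}: Colab vs. M1 Pro = {diff:+.1f}% time per epoch")
```

A positive value means Colab was slower, and a negative value means it was faster.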

You now know the numbers, but should you make a purchase decision based on them alone? Let’s discuss.

Parting Words

I’ve spent a lot of money on the 16" M1 Pro MacBook Pro, and the truth is, you can get the same training performance on laptops that are half the price or even for free with Colab. I don’t suffer from buyer’s remorse, as M1 Pro has a lot of other things going for it. It’s fast, responsive, and light, and it has a superb screen and all-day battery life. Heck, it packs a 16.2" screen in a chassis smaller than most 15" laptops!

M1 Pro or anything from Apple isn’t designed with data scientists and machine learning engineers in mind, but their Pro lineup from 2021 can definitely handle heavy workloads.

What are your thoughts on the best portable data science environment? Going all-in with M1 Pro / M1 Max or buying a cheaper laptop and spending the rest on cloud credits? Or something in between? Let me know in the comment section below.
