Generative AI is taking the world by storm. The most disruptive innovation is undoubtedly ChatGPT, which is an excellent free way to see what Large Language Models (LLMs) are capable of producing.

The problem with the free version of ChatGPT is that it isn’t always available and sometimes gets stuck mid-response. That’s a small price to pay, considering what it offers.

Still, people want more: access to a generative LLM that’s free of charge and works even without an Internet connection. Enter GPT4All.

My previous article showed what the CLI can do, and today we’ll focus entirely on the Python API.

Let’s dive in!


What is GPT4All?

GPT4All is an open-source ecosystem of chatbots trained on massive collections of clean assistant data including code, stories, and dialogue.

It allows you to run a ChatGPT alternative on your PC, Mac, or Linux machine, and also to use it from Python scripts through the publicly available library.

In my initial comparison, I found GPT4All to be nowhere near as good as ChatGPT. The responses were short and inconsistent, and the entire experience left a lot to be desired.

Still, it was a semi-viable alternative for those who want a ChatGPT-like solution available at all times, completely free of charge.

The previous article showed you how to get started, and today we’ll shift our efforts to using the Python client.

Up first, the library installation.

How to Install the GPT4All Python Library

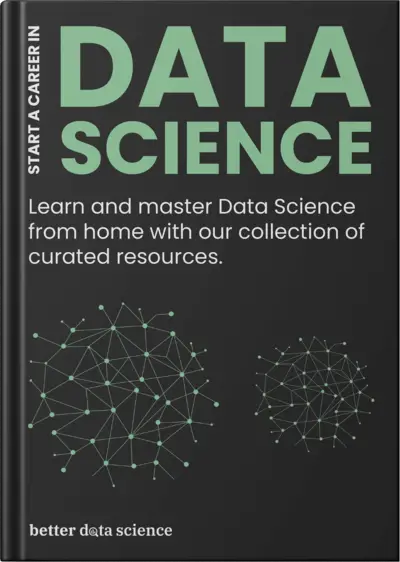

You can install the GPT4All Python library just like any other package: with the `pip install` command. Open a new Terminal window, activate your virtual environment, and run the following command:

```shell
pip install gpt4all
```

This is the output you should see:

Image 1 - Installing GPT4All Python library (image by author)

If you see the message `Successfully installed gpt4all`, you’re good to go!

The GPT4All Python library is now installed on your system, so let’s go over how to use it next.

Testing Out the GPT4All Python API - Is It Any Good?

You can now open any code editor you want. I’m using Jupyter Lab.

The first thing you have to do in your Python script or notebook is to import the library. Copy this line of code to the top of your script:

```python
import gpt4all
```

If you don’t get any errors, you’re good to go. If you do (typically a `ModuleNotFoundError`), you probably haven’t installed `gpt4all` in the active environment, so refer to the previous section.
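If you’d rather check from code than wait for an import error, here’s a minimal sketch that uses only the standard library: `importlib.util.find_spec()` and `importlib.metadata.version()` (Python 3.8+). The `gpt4all_status()` helper is a hypothetical name chosen for illustration. It is not part of the gpt4all package.

```python
import importlib.util
from importlib.metadata import PackageNotFoundError, version


def gpt4all_status() -> str:
    """Report whether the gpt4all package can be imported in this environment."""
    # find_spec() returns None when Python can't locate the module at all
    if importlib.util.find_spec("gpt4all") is None:
        return "gpt4all is not installed - run: pip install gpt4all"
    try:
        # Read the installed distribution's version from its package metadata
        return f"gpt4all {version('gpt4all')} is installed"
    except PackageNotFoundError:
        return "gpt4all is importable, but its version metadata is missing"


print(gpt4all_status())
```

Run it in the same environment (or Jupyter kernel) you plan to use. A common pitfall is installing into one virtual environment and running code in another.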

How to Load an LLM with GPT4All

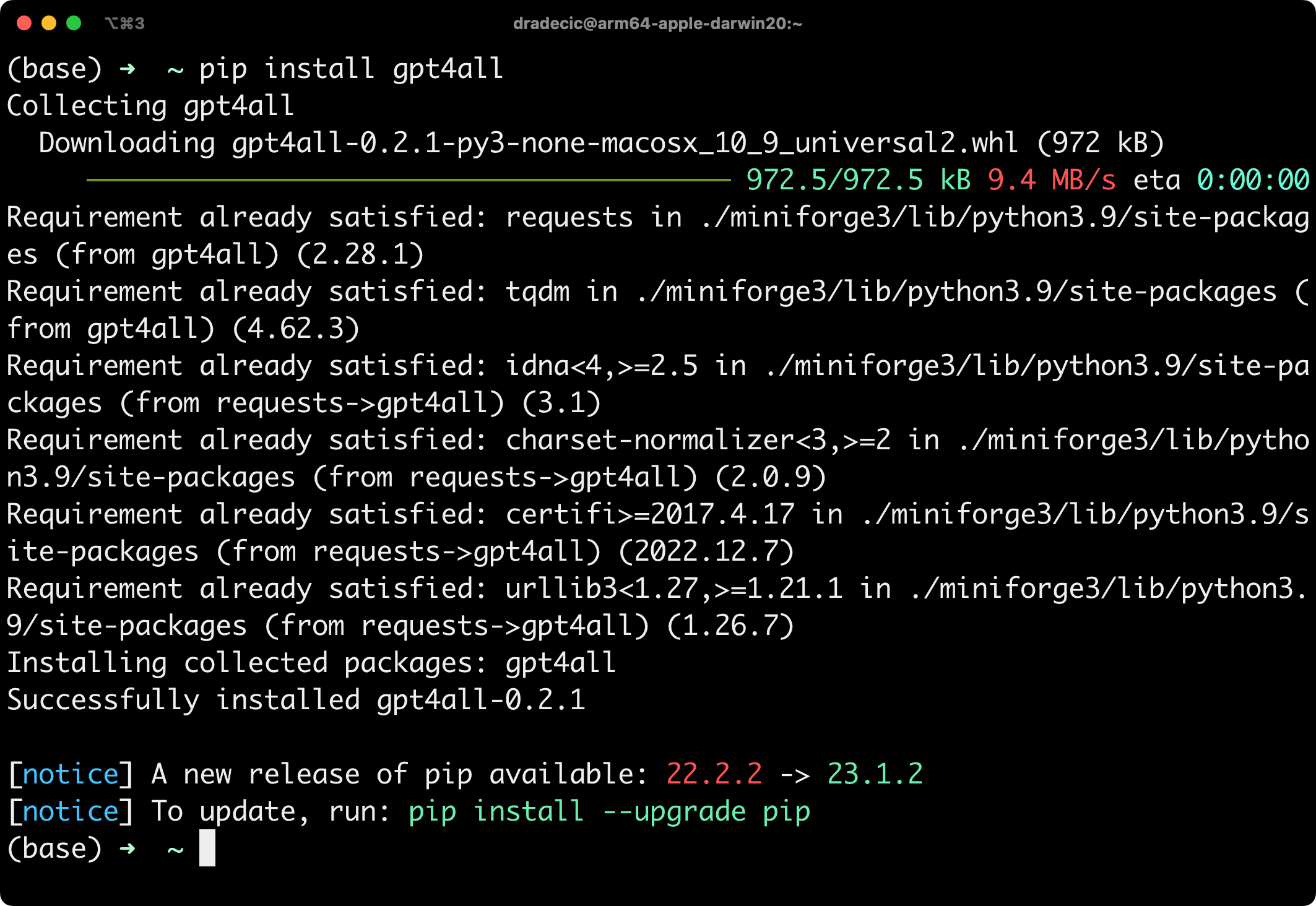

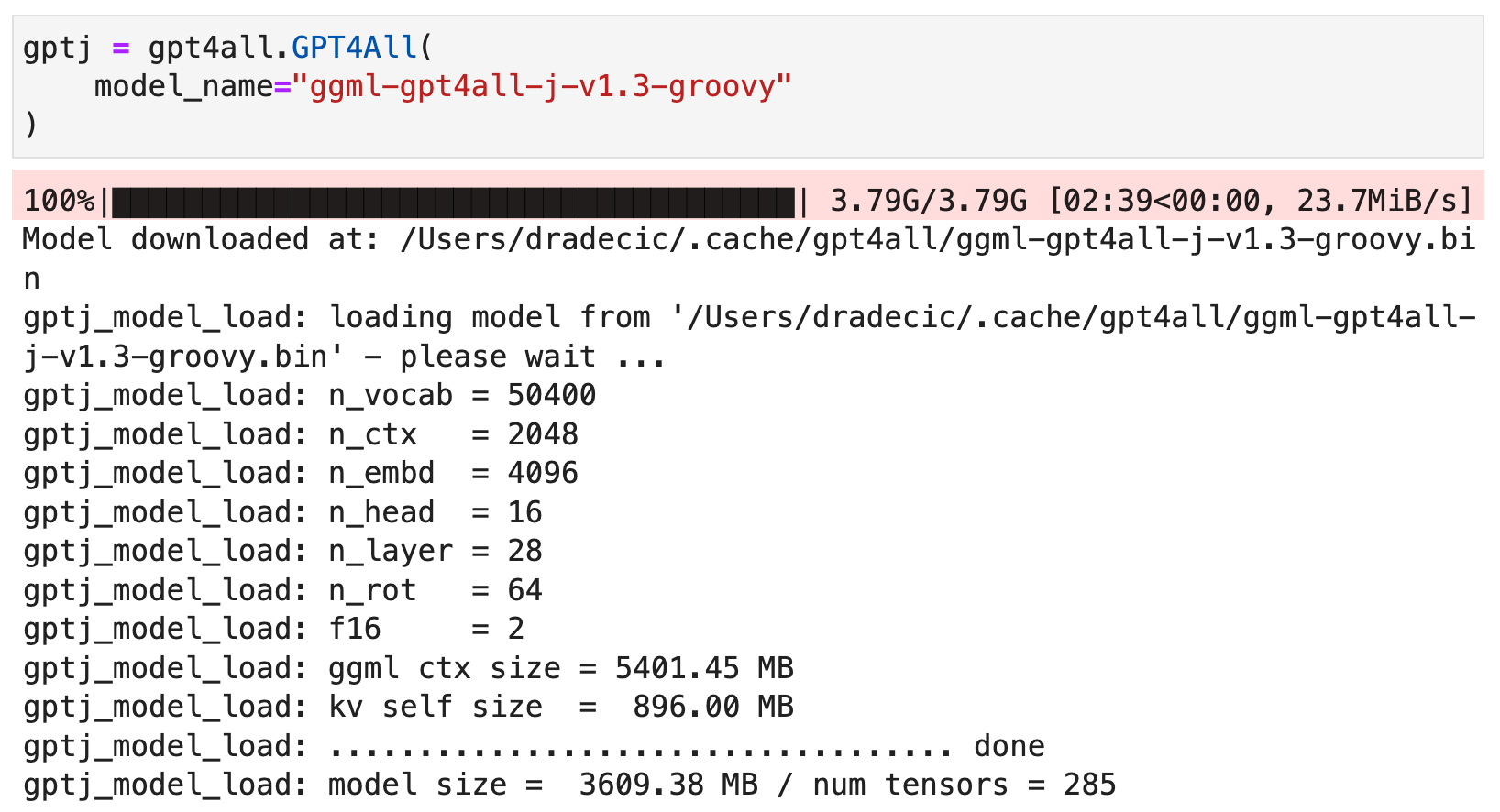

Once you have the library imported, you’ll have to specify the model you want to use. The `ggml-gpt4all-j-v1.3-groovy` model is a good place to start, and you can load it with the following command:

```python
gptj = gpt4all.GPT4All(
    model_name="ggml-gpt4all-j-v1.3-groovy"
)
```

This will start downloading the model if you don’t have it already:

Image 2 - Downloading the ggml-gpt4all-j-v1.3-groovy model (image by author)

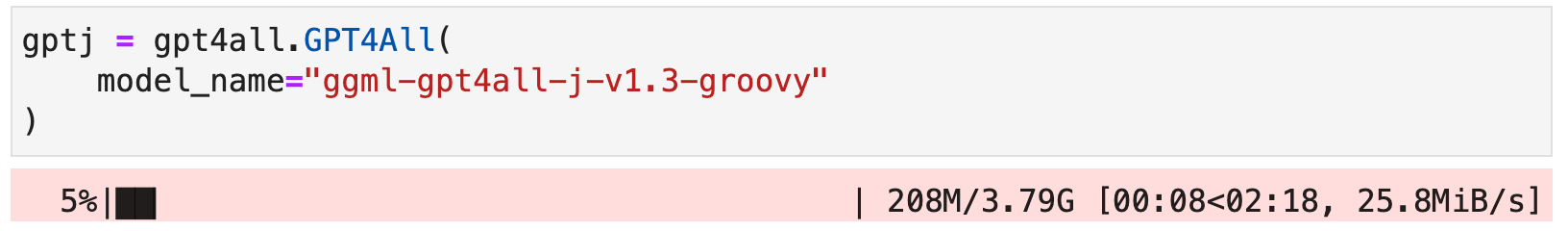

There are many other models to choose from: just scroll down to the Performance Benchmarks section and pick the one that fits your needs.

Their respective Python names are listed below:

Image 3 - Available models within GPT4All (image by author)

To choose a different one in Python, simply replace `ggml-gpt4all-j-v1.3-groovy` with one of the names you saw in the previous image.

In the meantime, my model has finished downloading (around 4 GB). Wait until yours does as well, and you should see something similar on your screen:

Image 4 - Model download results (image by author)
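The download is around 4 GB, so it can be handy to check whether a model is already on disk before loading it. The sketch below makes two assumptions. First, it assumes the bindings store models in `~/.cache/gpt4all/`. Second, it assumes they append `.bin` to names without an extension, as the releases from the time of this article did. Newer releases may use other locations or file formats, so verify both against your installed version. `model_path` and `allow_download` are constructor parameters of `gpt4all.GPT4All`. The helper function names are hypothetical.

```python
from pathlib import Path

# Assumption: default download folder used by gpt4all releases of this era
DEFAULT_MODEL_DIR = Path.home() / ".cache" / "gpt4all"


def model_file(model_name: str, model_dir: Path = DEFAULT_MODEL_DIR) -> Path:
    """Return the local path where a model file is expected to live."""
    # Assumption: early releases append ".bin" when the name has no extension
    if not model_name.endswith(".bin"):
        model_name += ".bin"
    return Path(model_dir) / model_name


def is_model_cached(model_name: str, model_dir: Path = DEFAULT_MODEL_DIR) -> bool:
    """True if the model file is already on disk, so loading won't re-download it."""
    return model_file(model_name, model_dir).is_file()


def load_cached_model(model_name: str, model_dir: Path = DEFAULT_MODEL_DIR):
    """Load a model only if it is already downloaded; never start a download."""
    if not is_model_cached(model_name, model_dir):
        raise FileNotFoundError(f"{model_file(model_name, model_dir)} not found")
    import gpt4all  # imported lazily so the helpers above work without the package

    return gpt4all.GPT4All(
        model_name=model_name,
        model_path=str(model_dir),
        allow_download=False,
    )


print(is_model_cached("ggml-gpt4all-j-v1.3-groovy"))
```

Loading through `load_cached_model()` fails fast with a `FileNotFoundError` instead of quietly starting a multi-gigabyte download. That’s useful on a metered or offline machine.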

We now have everything needed to write our first prompt!

Prompt #1 - Write a Poem about Data Science

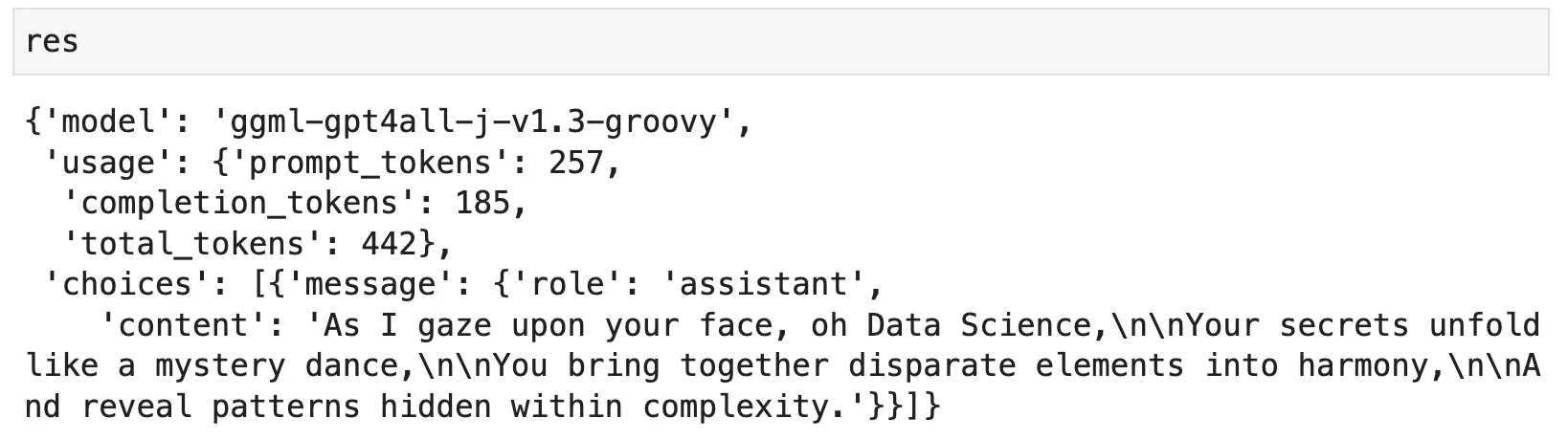

I’ll first ask GPT4All to write a poem about data science. Yes, it’s a silly use case, but we have to start somewhere.

To do the same, you’ll have to call the `chat_completion()` method on your `GPT4All` instance and pass in a list with at least one message. Take a look at the following snippet to get a full grasp:

```python
messages = [{"role": "user", "content": "Write a poem about Data Science"}]

res = gptj.chat_completion(messages)
res  # in Jupyter, a bare name on the last line displays its value
```

This is the output contained in the `res` variable:

Image 5 - A data science poem (image by author)

Now, if you want to pretty-print only the message content, you’ll have to do a bit of digging through Python’s nested dictionaries:

```python
print(res["choices"][0]["message"]["content"])
```

It’s not too bad, and the output now has improved formatting:

Image 6 - Pretty printed data science poem (image by author)
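Every prompt repeats the same steps: build the message list, call the model, and dig out the text. You might wrap them in a small helper. This is a sketch under assumptions: `reply_text()` and `ask()` are hypothetical names, and the dictionary shape is the one used above (`res["choices"][0]["message"]["content"]`).

```python
def reply_text(res: dict) -> str:
    """Pull the assistant's reply out of a chat_completion() response dict."""
    try:
        return res["choices"][0]["message"]["content"].strip()
    except (KeyError, IndexError, TypeError, AttributeError):
        # Unexpected shape: return an empty string instead of crashing
        return ""


def ask(model, question: str) -> str:
    """Send one user message to a loaded model and return only the reply text."""
    messages = [{"role": "user", "content": question}]
    return reply_text(model.chat_completion(messages))


# Usage with the model loaded earlier:
# print(ask(gptj, "Write a poem about Data Science"))
```

Keeping the dictionary digging in one place means there’s only one function to update if the response shape changes in a future release.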

Let’s ask GPT4All another question to see how it performs.

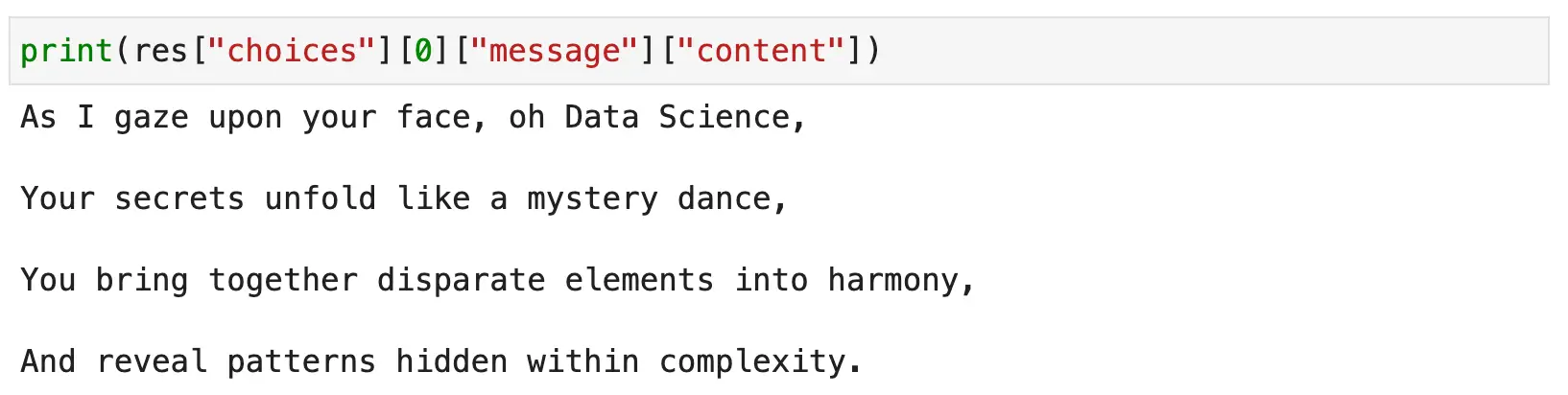

Prompt #2 - What is Linear Regression?

The thing I like about ChatGPT is the response volume. You ask it a simple question, and it often returns paragraphs of well-formatted and easy-to-understand text. It truly is a good place to learn complex topics, even data science!

Will GPT4All even come close? Let’s see.

The following code snippet asks a simple question: "What is linear regression?"

```python
messages = [{"role": "user", "content": "What is linear regression?"}]

res = gptj.chat_completion(messages)
print(res["choices"][0]["message"]["content"])
```

The response is correct, but leaves a lot to be desired:

Image 7 - Linear regression explanation (image by author)

You see, ChatGPT would return paragraphs of text and would likely include equations or even Python code. GPT4All did no such thing.
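When a reply is this brief, you could try a follow-up question. Append the model’s answer as an `assistant` message and your follow-up as a new `user` message. This is a minimal sketch that assumes `chat_completion()` treats the whole message list as conversation context. The helper name is hypothetical, and a longer answer is not guaranteed.

```python
def with_follow_up(messages: list, res: dict, question: str) -> list:
    """Return a new message list: earlier turns, the model's reply, then a new question."""
    reply = res["choices"][0]["message"]["content"]
    # Build a new list rather than mutating the caller's history
    return messages + [
        {"role": "assistant", "content": reply},
        {"role": "user", "content": question},
    ]


# Usage, continuing from the linear regression prompt:
# messages = with_follow_up(messages, res, "Explain it in more detail, with an example.")
# res = gptj.chat_completion(messages)
# print(res["choices"][0]["message"]["content"])
```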

So, is GPT4All a viable ChatGPT replacement? For me, hardly. It can do some things right but gets nowhere near the free version of ChatGPT.

Summing up GPT4All Python API

It’s not reasonable to assume an open-source model would defeat something as advanced as ChatGPT. Still, GPT4All is a viable alternative if you just want to play around and test the performance differences across different LLMs.
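If you want to put numbers on those differences, the rough sketch below sends one prompt to several models and records reply length and wall-clock latency. `compare_models()` is a hypothetical helper. `load` is any callable that returns a model with a `chat_completion()` method, for example `gpt4all.GPT4All` itself. Keep in mind that each model name you pass may trigger its own multi-gigabyte download.

```python
import time
from typing import Any, Callable, Dict, List


def compare_models(prompt: str, model_names: List[str],
                   load: Callable[[str], Any]) -> Dict[str, dict]:
    """Send one prompt to several models and record reply length and latency."""
    results = {}
    for name in model_names:
        model = load(name)  # loading (and any download) is excluded from the timing
        start = time.perf_counter()
        res = model.chat_completion([{"role": "user", "content": prompt}])
        elapsed = time.perf_counter() - start
        reply = res["choices"][0]["message"]["content"]
        results[name] = {"words": len(reply.split()), "seconds": round(elapsed, 2)}
    return results


# Usage (each model may be downloaded on first load):
# import gpt4all
# print(compare_models("What is linear regression?",
#                      ["ggml-gpt4all-j-v1.3-groovy"], gpt4all.GPT4All))
```

A single run per model is noisy. For a fairer comparison, repeat each prompt a few times.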

The other consideration you need to be aware of is the response randomness. Sometimes GPT4All will provide a one-sentence response, and sometimes it will elaborate more. Sometimes the response makes sense, sometimes it doesn’t. The same is true with ChatGPT, but I found GPT4All to be vague or incorrect much more frequently.
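To see this randomness for yourself, you could send the same prompt several times and look at how much reply length varies. Here’s a minimal sketch using the standard `statistics` module. `generate` is any function that turns a prompt into reply text, and `reply_length_spread()` is a hypothetical name.

```python
from statistics import mean, pstdev
from typing import Callable, Dict


def reply_length_spread(generate: Callable[[str], str], prompt: str,
                        runs: int = 5) -> Dict[str, object]:
    """Ask the same prompt several times and summarize how reply length (in words) varies."""
    lengths = [len(generate(prompt).split()) for _ in range(runs)]
    return {"lengths": lengths, "mean": mean(lengths), "stdev": pstdev(lengths)}


# Usage with the model loaded earlier:
# generate = lambda p: gptj.chat_completion(
#     [{"role": "user", "content": p}])["choices"][0]["message"]["content"]
# print(reply_length_spread(generate, "What is linear regression?"))
```

Word count is only a crude proxy. It shows how long the answers are, not whether they make sense, so you’ll still want to read them.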

What are your thoughts on GPT4All? Have you found a viable open-source ChatGPT alternative with a Python API? Let me know in the comment section below.