CSV files for big data storage? Think twice - there are radically better options available. These 5 will save IO time and disk space.

Everyone knows CSV, but is it really the file format you should use 100% of the time? Well, no. It has some obvious benefits, such as direct file editing and ease of understanding, but the drawbacks could cost you thousands of dollars in disk space and I/O time.

Today I’ll show you the top CSV alternatives with detailed explanations, code, and most importantly - numbers. You’ll know precisely how faster alternative file formats are and when should you use them.

What’s Wrong With the CSV Format?

Absolutely nothing. It allows you to edit the file directly without loading them with a programming language such as Python, Also, you can send it directly to a non-tech-savvy client, and they’ll have no trouble opening it and understanding the content.

It’s all sunshine and rainbows until files become too big to share, too big to open in Excel, or so huge you need to upgrade your cloud storage plan.

When it comes to datasets with millions of rows, you shouldn’t even consider saving them in CSV file format. You won’t ever send such a dataset to a non-tech-savvy client, nor will you open it in Excel.

That’s when other file formats or CSV alternatives become a thing. Let’s go over them next.

Top 5 CSV Alternatives That Save Time and Space

I find these file formats much better than CSV when working with huge tabular datasets:

- Pickle

- Parquet

- Feather

- Avro

- ORC

I’ll now explain each in a couple of sentences and show you how to work with them in Python.

Pickle

You can use Python’s pickle library to serialize a Python object into a compact binary representation. It allows you to store, or pickle any Python object, not just Pandas DataFrames. It’s often used to save machine learning models.

The biggest downside of Pickle is that its Python-specific, so cross-language support isn’t guaranteed.

To dump a Pandas DataFrame into a pickle file format, use:

import pickle

with open('path/to/df.pkl', 'wb') as f:

pickle.dump(df, f)

To read a Pickle file, use:

import pickle

with open('path/to/df.pkl', 'rb') as f:

df = pickle.load(f)

Parquet

Apache Parquet is a data storage format designed for efficiency. The reason behind this is the column storage architecture, as it allows you to skip data that isn’t relevant quickly. The best part - Pandas has full native support for Parquet.

To write a Pandas DataFrame to a Parquet file, run:

df.to_parquet('file_name.parquet')

To read a Parquet file into a Pandas DataFrame, run:

df = pd.read_parquet('file_name.parquet')

Feather

Feather is a data format for storing data frames. It’s designed to push data frames in and out of memory as efficiently as possible. Its primary use case is to enable fast communication between Python and R, but you’re not limited to that alone.

To dump a Pandas DataFrame to a Feather file, run:

import feather

feather.write_dataframe(df, 'file_name.feather')

To read a Feather file as a Pandas DataFrame, run:

import feather

df = feather.read_dataframe('file_name.feather')

Avro

Avro is an open-source project which provides services of data serialization and exchange for Apache Hadoop. It stores a JSON-like schema with the data, which you have to write and parse manually first.

To save a Pandas DataFrame as an Avro file, run:

from fastavro import writer, parse_schema

# 1. Define the schema

schema = {

'doc': 'name',

'name': 'name',

'namespace': 'name',

'type': 'record',

'fields': [

{'name': 'a', 'type': 'float'},

{'name': 'b', 'type': 'string'},

...

]

}

parsed_schema = parse_schema(schema)

# 2. Convert pd.DataFrame to records - list of dictionaries

records = df.to_dict('records')

# 3. Write to Avro file

with open('file_name.avro', 'wb') as out:

writer(out, parsed_schema, records)

It’s quite a lot, especially if you have many columns. Reading Avro files and converting them to a Pandas DataFrame is also a three-step process:

from fastavro import reader

# 1. List to store the records

avro_records = []

# 2. Read the Avro file

with open('file_name.avro', 'rb') as fo:

avro_reader = reader(fo)

for record in avro_reader:

avro_records.append(record)

# 3. Convert to pd.DataFrame

df = pd.DataFrame(avro_records)

ORC

ORC stands for Optimized Row Columnar. It’s a data format optimized for reads and writes in Hive. With Python, you can use the read_orc() function from Pandas to read ORC files. There’s no alternative function for writing ORC files, so you’ll have to use PyArrow.

import pyarrow as pa

import pyarrow.orc as orc

table = pa.Table.from_pandas(df, preserve_index=False)

orc.write_table(table, 'file_name.orc')

Reading ORC files is much simpler:

df = pd.read_orc('file_name.orc')

We’ll now set up a Python benchmark script and how these top CSV alternatives for Python and Pandas compare.

Top 5 CSV Alternatives - Benchmark in Python

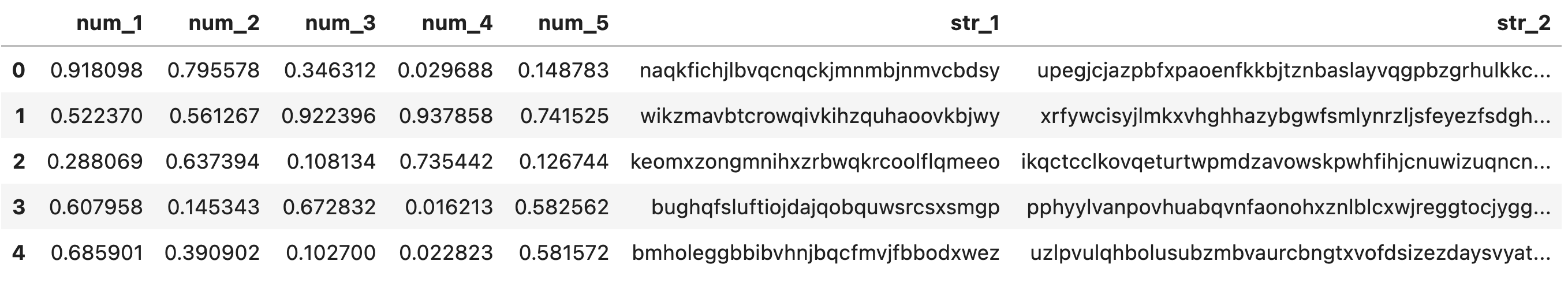

For this benchmark, I’ve decided to create a 5M row Pandas DataFrame with 5 numeric and 2 textual columns. I was using M1 Pro MacBook Pro (10-core CPU, 16 GB RAM) for the benchmark, so your mileage may vary.

Here’s the code snippet if you want to recreate it:

import random

import string

import numpy as np

import pandas as pd

np.random.seed = 42

def get_random_string(length):

letters = string.ascii_lowercase

return ''.join(random.choice(letters) for i in range(length))

df_size = 5_000_000

df = pd.DataFrame({

'num_1': np.random.rand(df_size),

'num_2': np.random.rand(df_size),

'num_3': np.random.rand(df_size),

'num_4': np.random.rand(df_size),

'num_5': np.random.rand(df_size),

'str_1': [get_random_string(length=32) for x in range(df_size)],

'str_2': [get_random_string(length=128) for x in range(df_size)]

})

And here’s what the dataset looks like:

Image 1 - Synthetic 5M row dataset (image by author)

Next, let’s take a look at the benchmark results - both for file save/read, and the file size.

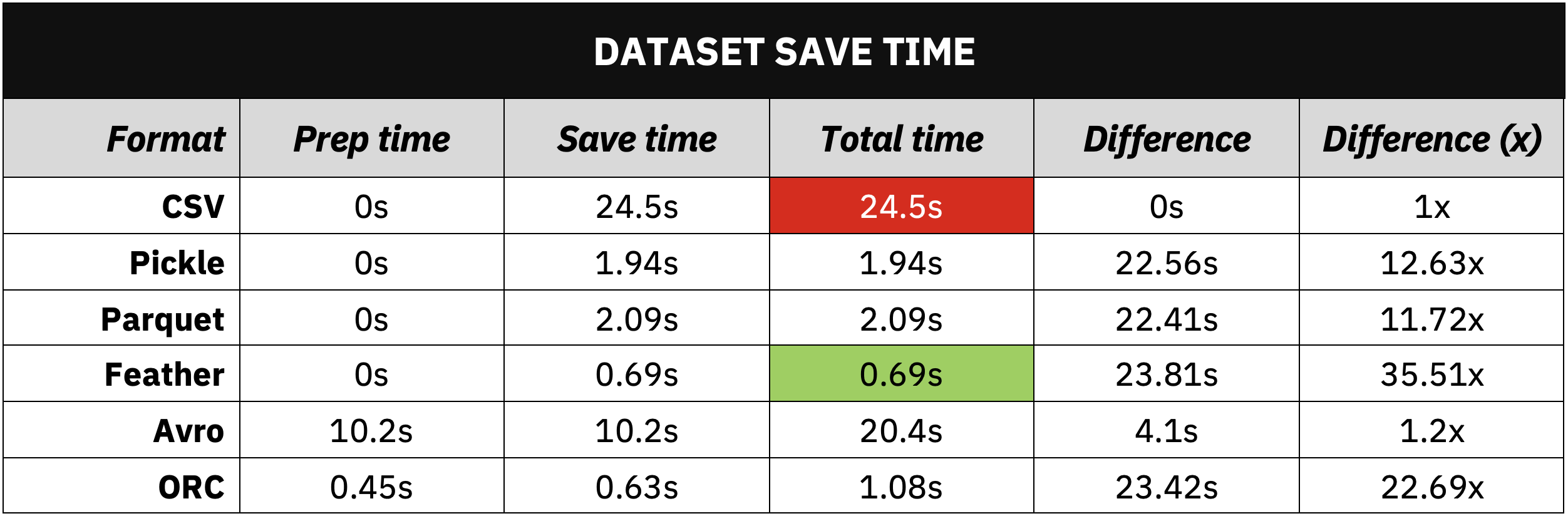

Benchmark result - Dataset save time

Saving a 5M row dataset to a CSV File took the most time - 24.5 seconds. CSV alternatives such as Feather took only 0.69 seconds, which is 35 times faster:

Image 2 - Dataset save time comparison (image by author)

You can see this pattern repeat among most of the top CSV alternatives. Avro file format was closest to CSV in save time, only because parsing the schema and converting the dataset to a dictionary took some time (prep time column).

Let’s see how the read times compare.

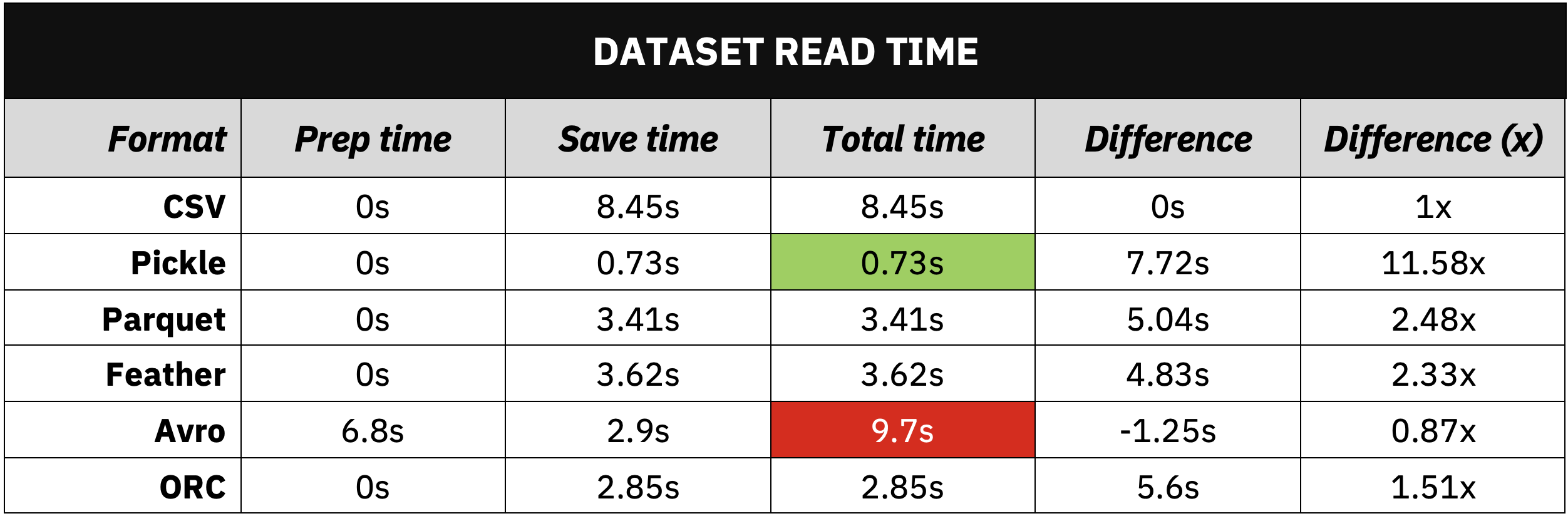

Benchmark result - Dataset read time

We don’t see as significant of a difference in dataset read time. Pandas took 8.45 seconds to read a 5M row CSV file, and alternatives such as Avro were even slower if we account for the prep time:

Image 3 - Dataset read time comparison (image by author)

Pickle file format stands out here, but it’s a Python-specific data format, which is why you don’t see it commonly used in a multi-language environment. Other than that, Parquet looks promising with around 2.5x read time reduction.

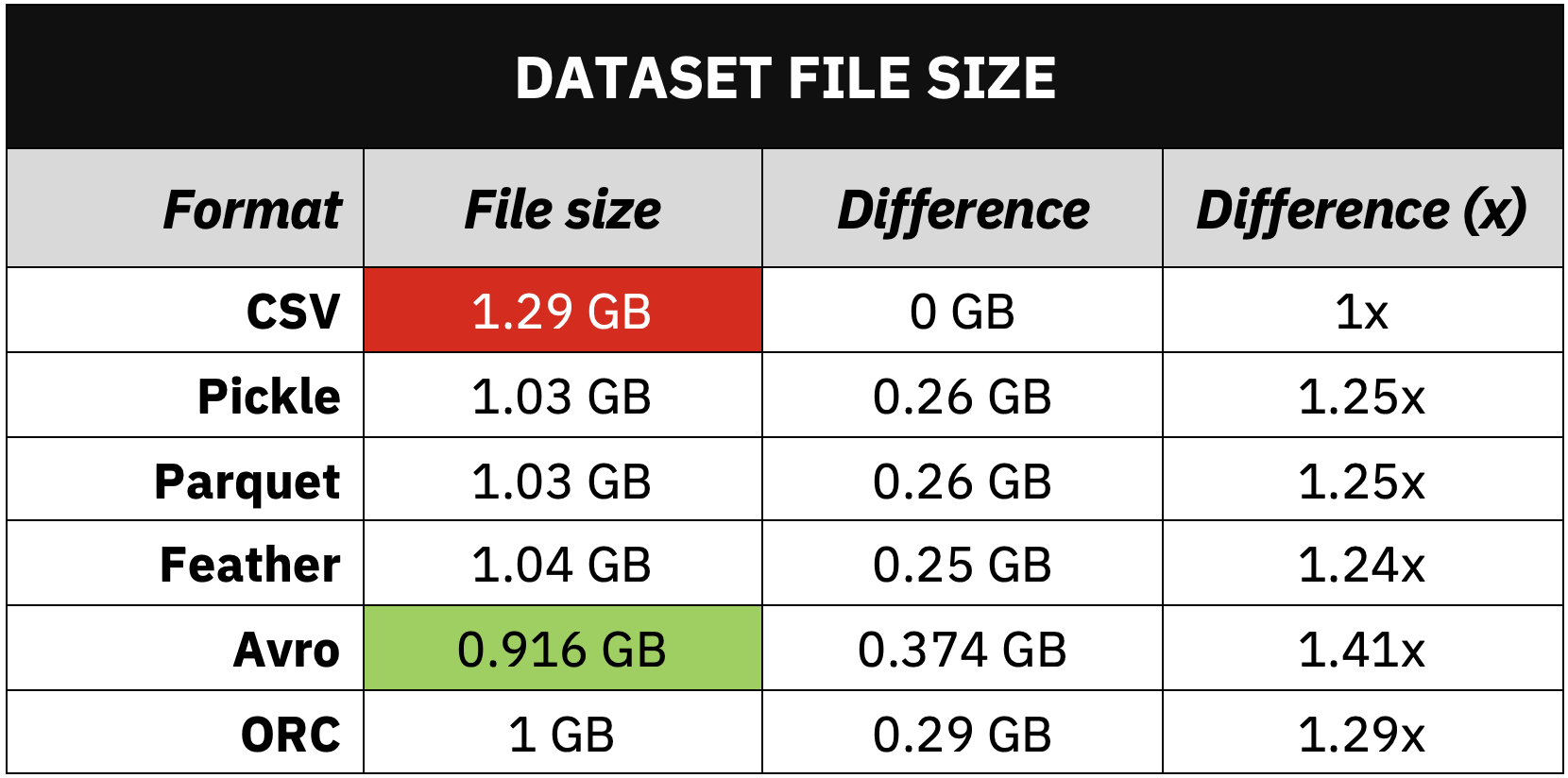

Benchmark result - Dataset file size

And finally, let’s take a look at the file size for each top CSV alternative:

Image 4 - Dataset file size comparison (image by author)

The differences aren’t huge here, but most of the alternatives offer around 20%-25% reduction in file size. Avro was a clear winner here, so use this file format if small file sizes are preferred, and you don’t care about IO speed.

Summing Up Top CSV Alternatives for Data Science

CSV file format isn’t going anywhere. It’s still the most common one (after XLSX) if you need to share your data with business clients that expect to examine it in Excel. Most business users have no idea what Avro or Parquet mean, so stick to CSV in this scenario.

Otherwise, if you’re paying for cloud compute resources and storage, optimizing how you store data can save you a ton of money in the long run. Use Pickle if you know you’ll use Python only, go with Avro if small file size is crucial, or opt for alternatives such as Parquet, ORC, or Feather for the best balance between file size and IO speeds. Pretty much everything is better than CSV.

What’s your favourite file format for storing large datasets? Let me know in the comment section below.