Spark SQL - From basics to Regular Expressions and User-Defined Functions (UDF) in 10 minutes

DataFrames in Spark are a natural extension of RDDs. They are really similar to a data structure you’d normally see in Pandas, but with one additional functionality - you can manipulate them directly with SQL. That’s right, you can use the language of data to work with huge datasets locally and on a cluster.

Today we’ll keep things simple and explore the basics of Spark SQL. You’ll first learn how to avoid SQL altogether by using function calls. I like to use SQL whenever possible, so most of the article will revolve around aggregation functions, conditional logic, regular expressions, and user-defined functions - all in SQL.

Don’t feel like reading? Watch my video instead:

Dataset Used

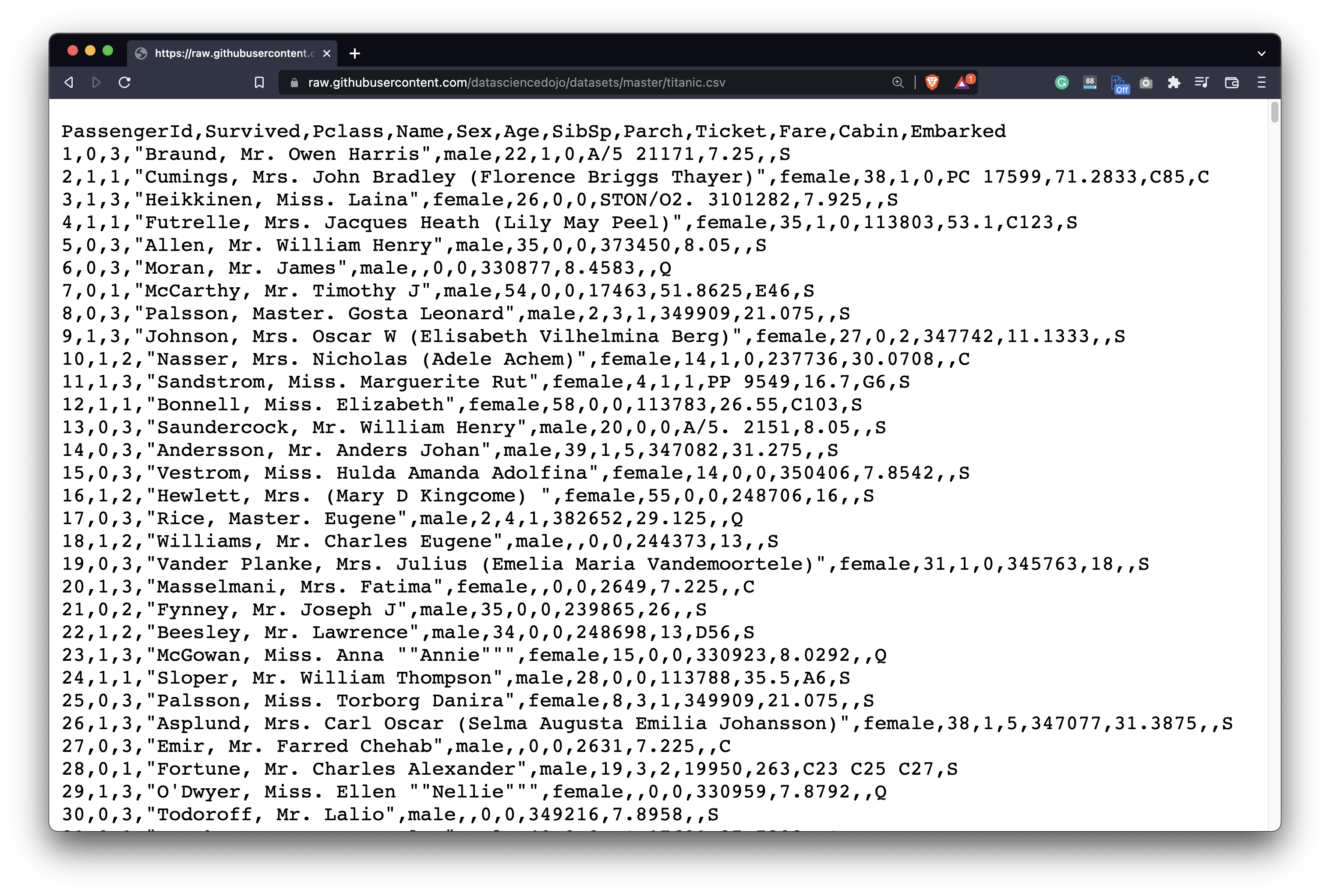

To keep things extra simple, we’ll use the Titanic dataset. Download it from this URL and store it somewhere you’ll remember:

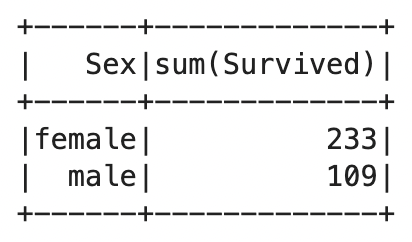

Image 1 - Titanic dataset (image by author)

The dataset packs much more features than, let’s say, Iris dataset. These will come in handy later for user-defined functions and regular expressions. But first, let’s see how to load it with Spark.

How to Read CSV File with Spark DataFrame API

If you’re working with PySpark in a notebook environment, always use this code snippet for better output formatting. Otherwise, the dataframes are likely to overflow if there are too many columns to see on the screen at once:

from IPython.core.display import HTML

display(HTML("<style>pre { white-space: pre !important; }</style>"))

When that’s out of the way, we can initialize a new Spark session:

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("spark-sql").getOrCreate()

To read a CSV file, simply specify the path to the csv() function of the read module. The inferSchema and header parameters are mandatory whenever reading CSV files. Without them, Spark will cast every data type to string and treat the header row as actual data:

titanic = spark.read.csv(

path="file://<dataset-path>",

inferSchema=True,

header=True

)

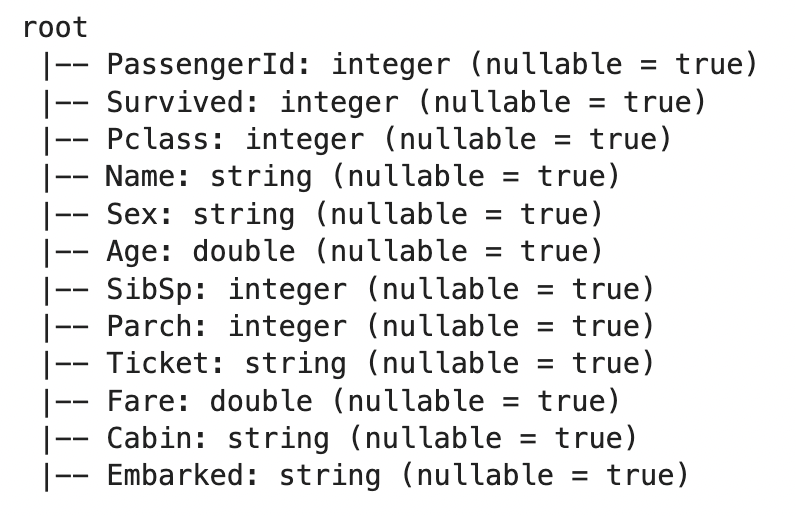

titanic.printSchema()

Image 2 - Inferred schema of the Titanic dataset (image by author)

The data types look correct, so let’s print the first 10 rows to verify everything works as expected:

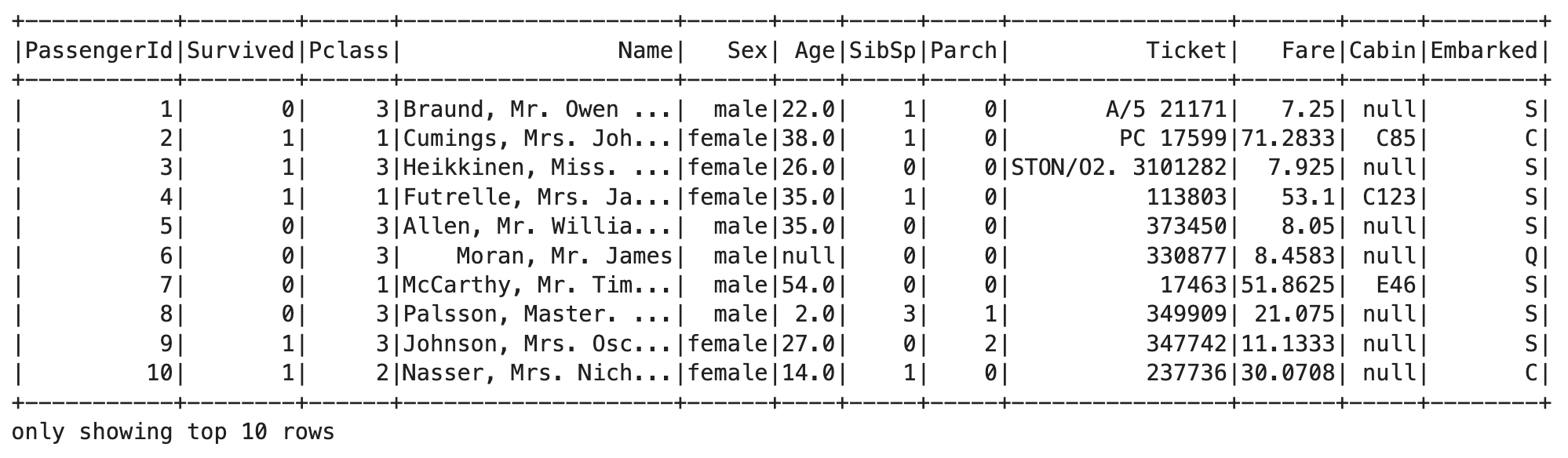

titanic.show(10)

Image 3 - First 10 rows of the Titanic dataset (image by author)

That’s all we need to start analyzing the Titanic dataset.

Data aggregations with Spark function calls

You don’t need to use SQL to work with Spark DataFrames. There’s a dedicated function for everything. For example, you can use the select() function to isolate any columns of interest:

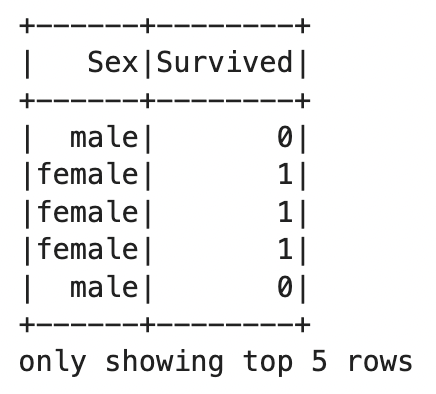

titanic.select("Sex", "Survived").show(5)

Image 4 - Selecting columns with Spark’s DataFrame API (image by author)

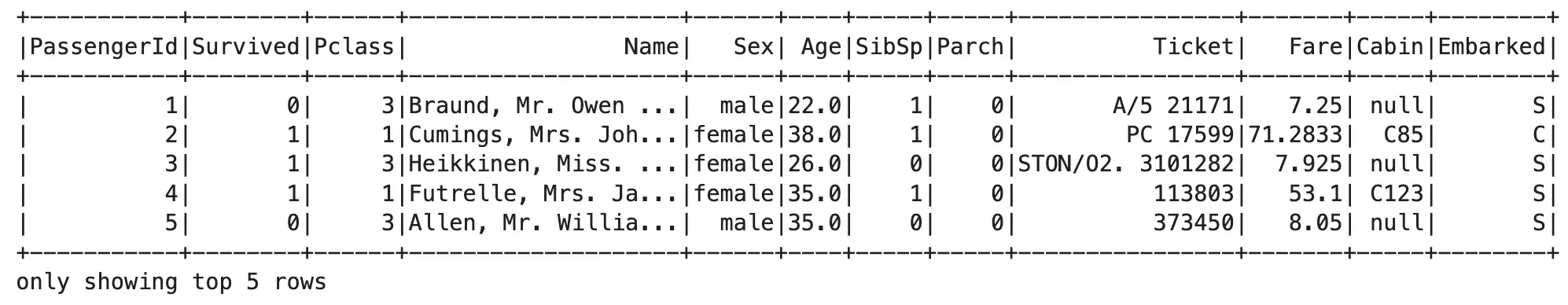

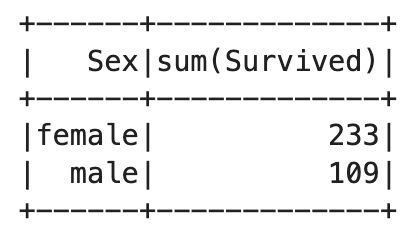

From there, you can also aggregate the dataset by grouping on a categorical variable and passing an aggregate function. The example below shows how many passengers survived per gender:

titanic.select("Sex", "Survived").groupBy("Sex").sum().show()

Image 5 - Aggregate calculations in Spark (image by author)

Moving forward, you have two options. You can either continue chaining functions or redeclare a variable multiple times for more readability. If neither sounds like fun, you should definitely try SQL syntax.

How to Use Spark SQL to Query Spark DataFrames

Before you can use SQL in Spark, you’ll first need to create a temporary view. That’s done by calling createOrReplaceTempView() function on a DataFrame, and passing in a view name (arbitrary one).

Once created, pass any SQL statement in a call to spark.sql():

titanic.createOrReplaceTempView("titanic")

spark.sql("SELECT * FROM titanic").show(5)

Image 6 - First 5 rows of the Titanic dataset (image by author)

Recreating the DataFrame from the previous section is much cleaner to do in SQL, provided you know how the GROUP BY clause works:

spark.sql("""

SELECT

Sex,

SUM(Survived)

FROM titanic

GROUP BY Sex

""").show()

Image 7 - Aggregate calculations with Spark SQL (image by author)

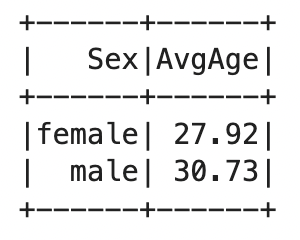

Easy, right? We can calculate the average age per gender in a similar manner. The ROUND() function will make the result more readable by rounding it to two decimal places:

spark.sql("""

SELECT

Sex,

ROUND(AVG(Age), 2) AS AvgAge

FROM titanic

GROUP BY Sex

""").show()

Image 8 - Aggregate calculations with Spark SQL (2) (image by author)

Conditional logic with the CASE operator

The Cabin column is quite problematic. Its most frequent value is NULL, indicating the passenger didn’t have a cabin, or that a cabin for a given passenger isn’t known.

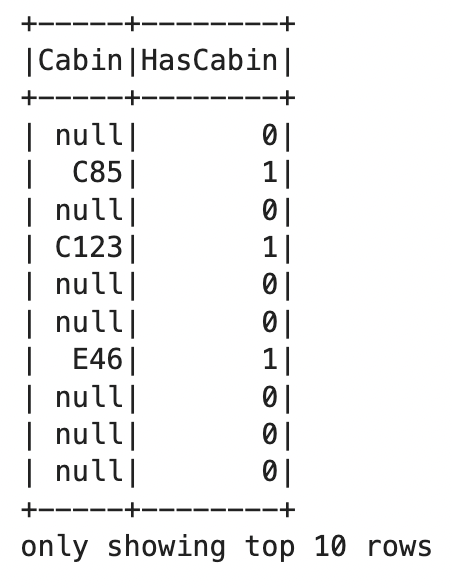

We can use SQL’s CASE operator to make this column useful. The example below creates a column HasCabin that has a value of 1 if the Cabin is not NULL, and 0 otherwise:

spark.sql("""

SELECT

Cabin,

CASE

WHEN Cabin IS NOT NULL THEN 1

ELSE 0

END AS HasCabin

FROM titanic

""").show(10)

Image 9 - Conditional logic in SQL queries (image by author)

Feature engineering with Regular Expressions

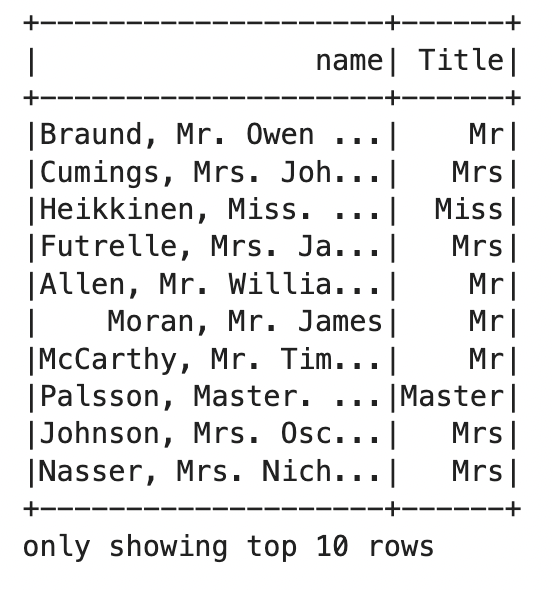

Regular expressions sometimes feel like a foreign language, but they have many use cases in data science and data analytics. For example, the Name column has a title attached to each passenger after the first comma sign. We can use regular expressions in Spark SQL to extract it:

spark.sql("""

SELECT

name,

REGEXP_EXTRACT(name, ' ([A-Za-z]+)\.') AS Title

FROM titanic

""").show(10)

Image 10 - Extracting passenger title with regular expressions (image by author)

The hardest part here is coming up with appropriate regular expressions. There are a ton of them available online for different use cases, such as phone numbers and email addresses, so keep that in mind. No need to reinvent the wheel.

How to use User Defined Functions (UDF) in Spark SQL

Spark SQL doesn’t ship with a function you need? That’s where User Defined Functions come into play. In PySpark, you can create a Python function and wrap it with PySpark SQL udf() to register it as a User Defined Function.

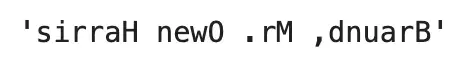

We’ll keep things simple today and declare a Python function that reverses a string. This function will be used later to reverse a name. Not particularly useful functionality, but that’s not the point.

Here’s a declaration and usage example of the function:

def reverse_name(name):

return name[::-1]

reverse_name("Braund, Mr. Owen Harris")

Image 10 - Reversing a string with Python (image by author)

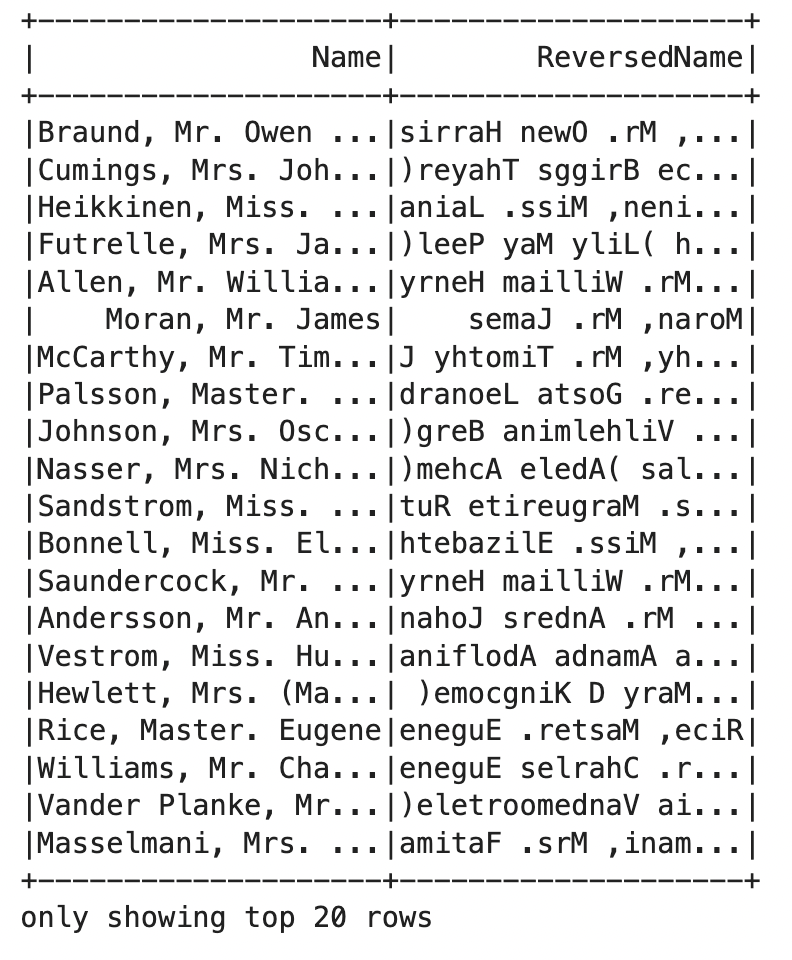

To use it in PySpark, you’ll first have to register a Python function as a User Defined Function. The first parameter specifies the name you’ll use in SQL, and the second parameter is the Python function itself.

Once registered, you’re free to use it in Spark SQL:

spark.udf.register("reverse_name", reverse_name)

spark.sql("""

SELECT

Name,

reverse_name(Name) AS ReversedName

FROM titanic

""").show()

Image 12 - Using UDFs in Spark SQL (image by author)

It doesn’t get easier than that. User Defined Functions are a topic worth discussing in a separate article, so I won’t go any further today.

Summary of Spark SQL in Python

And there you have it - the basics of Spark SQL in only a couple of minutes. You’ve learned a lot - from reading CSV files to feature extraction with regular expressions and user-defined functions. It’s a lot to process at once, so feel free to go over the materials until they sink in.

User-defined functions make anything possible in Spark. Even if you don’t know how to do something in SQL, you can easily wrap the logic in a Python function, and use UDFs in your SQL statements. It’s definitely an area we’ll explore in future articles.

Up next, we’ll rewrite the word count solution from RDDs to DataFrames, so stay tuned.

Recommended reads

- 5 Best Books to Learn Data Science Prerequisites (Math, Stats, and Programming)

- Top 5 Books to Learn Data Science in 2022

- 7 Ways to Print a List in Python

Stay connected

- Hire me as a technical writer

- Subscribe on YouTube

- Connect on LinkedIn